AI Impact on Gaming and Media Tooling

Dan Moskowitz Oct 28, 2025

Automation as a tool has been part of gaming history for several decades, if you count the Nimatron in 1940, or perhaps even centuries, if you count the original Mechanical Turk in 1770 (as long as you ignore the human hidden inside the cabinet pulling the Turk’s strings). The more recent surge of AI in the games industry has created much hype, but it has also caused some heartburn among both industry participants (“Will I still have a job in 3-5 years?”) and players (“I don’t want to play ‘AI slop’”).

The reality is that gaming has always been driven by interactive storytelling and connections to the player. Automation doesn’t change that. It may lead to more ‘slop’, but picking through cash-grab shovelware is already something players are used to (anyone who has used Switch’s eShop can attest). And on a micro-scale, within a studio, it will make some jobs redundant in much the same way Unity and Unreal did in the early days of engine outsourcing. But on a macro-scale, these new AI tools are going to drive so much efficiency that more creators can make more games. It will be a net boon to the industry.

This report looks at the potential impact (primarily economic) of AI in each category of game development where real innovation is happening today.

The stages of game development

First, a brief overview of the art and science of game-making.

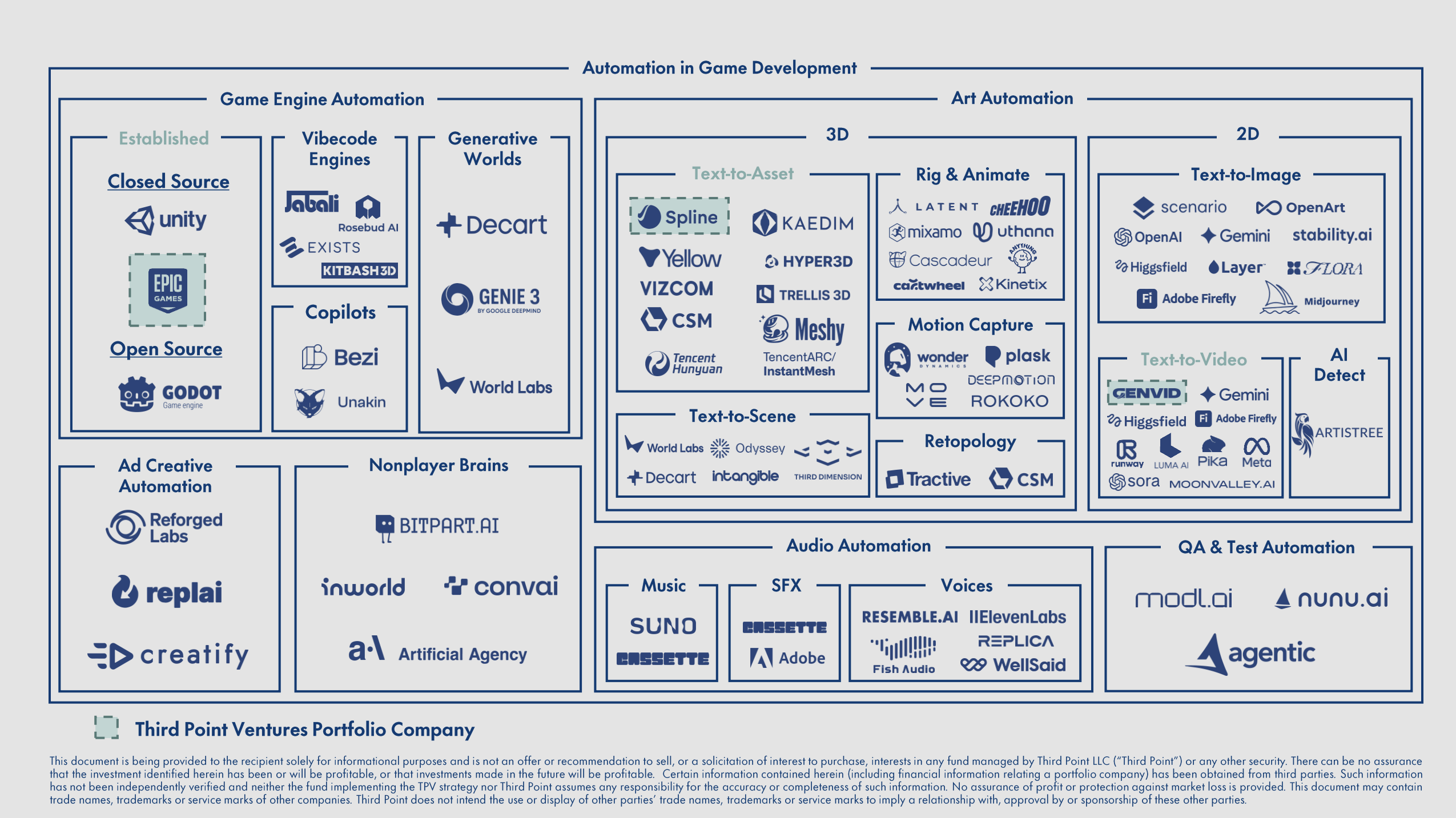

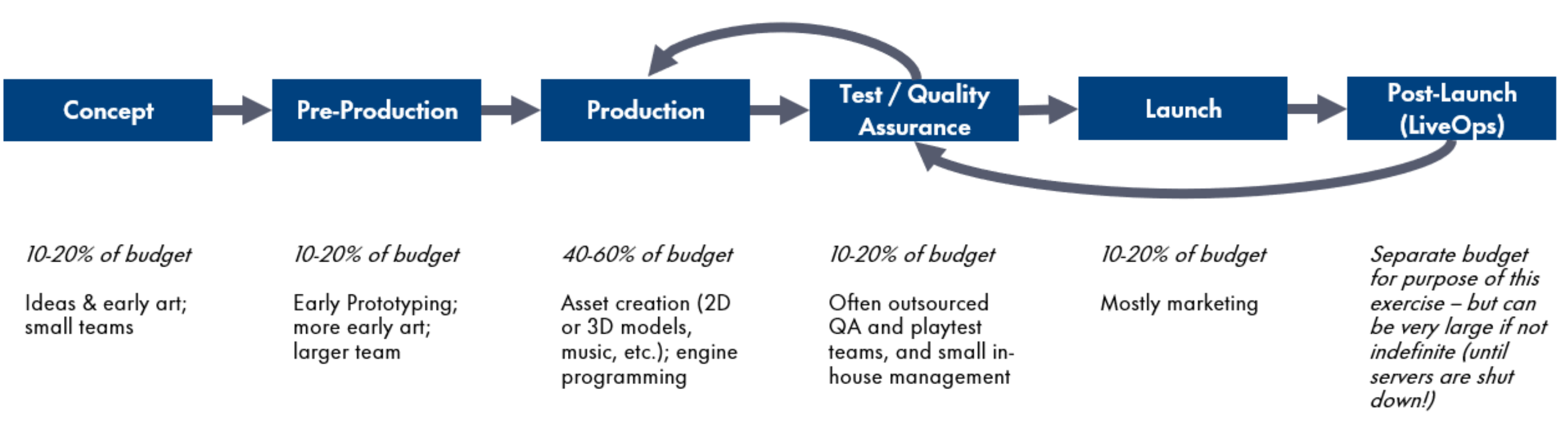

Figure 1: The six stages of game development. Source: Third Point Ventures analysis.

We surveyed 50 budget-owning producers and creative directors at game studios of all shapes and sizes to better understand how much budget is allocated to each stage of game development. This was necessary to understand which near-term AI innovations are most likely to drive the highest cost savings.

Figure 2: Overview of survey participation in terms of studio type and total budget. Source: Third Point Ventures analysis and AlphaSights.

A game starts in the Concept phase (10-20% of final budget). This is where very small teams hash out early ideas. Think: whiteboarding, small writers’ room, and very rough art meant to convey themes and ideas.

Next is Pre-Production (10-20% of budget). More art, and perhaps early prototyping of some mechanics, are created by a larger team.

After Pre-Production comes Production (40-60% of budget). Here, one or more teams of programmers write production-grade code inside their engine of choice, while artists create game-ready 2D or 3D assets and music.

Quality Assurance (QA) and Playtesting (10-20% of budget) happen on “slices” of the game. If developers had to wait until the final game was done before testing it, nothing would ever ship on time. Instead, small chunks of the game are tested continuously, and the feedback is incorporated back into the development cycle. Studios fall along a spectrum here. Great developers are masters of iterative design and feedback. Others are less skilled at it, or are under such immense budget pressure that they simply can’t afford to do it well. That gap is usually what leads to game-breaking bugs shipping at release, which is a big deal (more on that later).

After development and testing are complete, the game enters Launch (10-20% of budget). Keep in mind that Launch budgets vary wildly. Marketing costs for some of the largest AAA games can be as large as the entire development cost. For example, some estimates for Grand Theft Auto V put the development budget at $137 million and the marketing budget at $128 million. For the average studio, marketing costs will be big, but not that big. For the smallest studios and indie breakout hits, marketing budgets are almost nil, and word of mouth, earned press, and virality are all that matter.

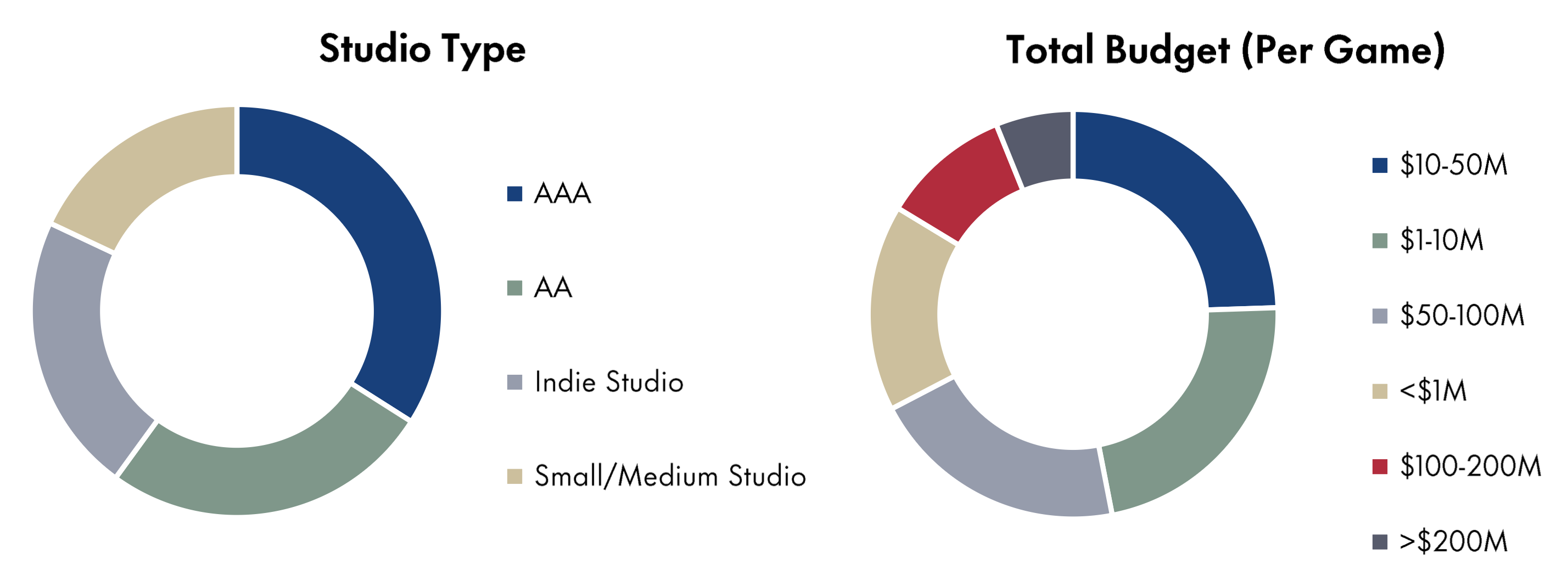

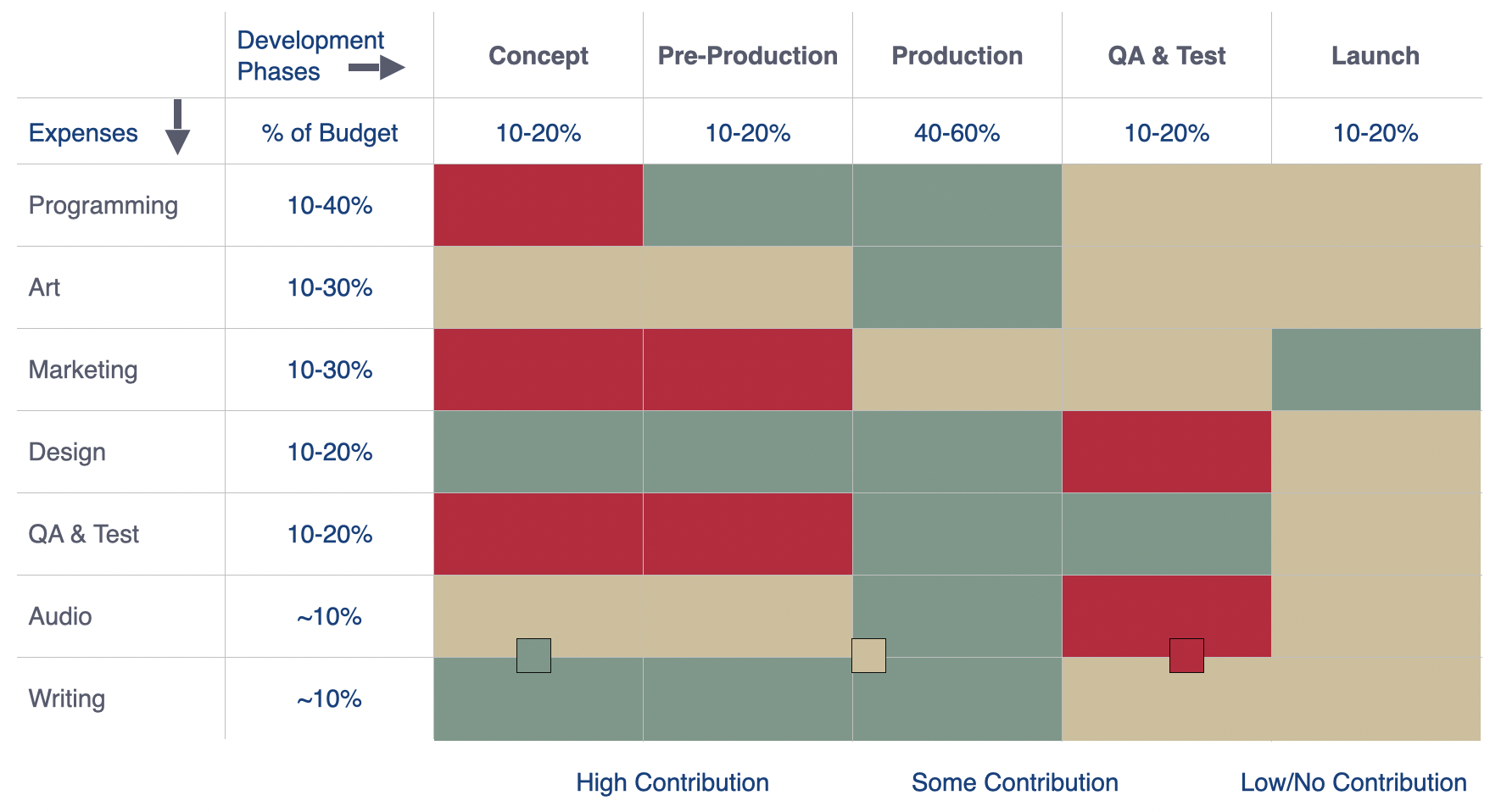

Figure 3: Breakdown of game budgets according to survey participants. Source: Third Point Ventures analysis and AlphaSights.
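The survey ranges overlap: their midpoints add up to 110%, not 100%. As a result, any single planning split is an approximation. The minimal sketch below takes the midpoint of each stage's range, rescales the midpoints so the shares sum to 100%, and applies them to a hypothetical $10 million budget. The budget figure, the normalization choice, and the helper names are illustrative assumptions. They are not survey data and not the method behind Figure 3.

```python
# Survey ranges (percent of final budget) for each stage, as reported above.
STAGE_RANGES = {
    "Concept": (10, 20),
    "Pre-Production": (10, 20),
    "Production": (40, 60),
    "QA & Playtesting": (10, 20),
    "Launch": (10, 20),
}


def midpoint_shares(ranges: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Take each range's midpoint, then rescale so the shares sum to 1.0.

    This is one simple normalization choice (an assumption), not the
    methodology behind the survey figures.
    """
    midpoints = {stage: (low + high) / 2 for stage, (low, high) in ranges.items()}
    total = sum(midpoints.values())  # 110 for the ranges above
    return {stage: mid / total for stage, mid in midpoints.items()}


def allocate(budget: float, shares: dict[str, float]) -> dict[str, float]:
    """Split a (hypothetical) total budget according to the shares."""
    return {stage: budget * share for stage, share in shares.items()}


if __name__ == "__main__":
    shares = midpoint_shares(STAGE_RANGES)
    for stage, dollars in allocate(10_000_000, shares).items():
        print(f"{stage:<18} {shares[stage]:6.1%}  ${dollars:,.0f}")
```

Under this normalization, Production lands at roughly 45% and every other stage at roughly 14%.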

AI Applications in Each Phase of Game Development

Programming (Percent of Budget: 10-40%)

The selection of a game engine typically happens in Pre-Production and is one of the most consequential decisions a team will make (changing the engine when they have already created much of the code and systems is very difficult).

Most modern developers are going to choose either Unity or Epic Unreal Engine. In 2024, these two engines accounted for ~80% of all games released on Steam versus only 10% built on custom engines.1 Interestingly, over 40% of Steam revenue earned in 2024 was from games made on custom engines. This discrepancy is because nearly all custom builds are for large AAA studios, famously including EA (Frostbite, which was developed for EA’s Battlefield series but is now used for many EA properties), many Nintendo properties including the recent Zelda games, and Rockstar (GTAV was built on their proprietary engine called RAGE).
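Some rough arithmetic shows the size of that gap. Assume the remaining ~60% of 2024 Steam revenue is spread across the remaining ~90% of titles. This is a simplification: both inputs are approximate, and “over 40%” is a floor.

```latex
\[
\frac{\text{revenue share per custom-engine title}}{\text{revenue share per other title}}
\approx \frac{40\% / 10\%}{60\% / 90\%}
= \frac{4}{2/3}
= 6
\]
```

Under these assumptions, the average custom-engine game earned on the order of six times as much as the average game built on anything else. That is consistent with custom engines being concentrated among large AAA releases.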

AAA developers historically built in-house engines for several reasons, but the biggest ones tended to be cost savings (avoiding royalties to the engine provider) and full control of the tech stack to support edge-case requirements. But there is a trade-off. Total costs may be higher for custom builds, and the complexity of a custom engine is not always easy to manage with in-house technical talent. As one EA developer put it to Kotaku, “Frostbite (EA’s engine) is … a Formula 1. When it does something well, it does it extremely well. When it doesn’t do something, it really doesn’t do something.”2

The cost-benefit curve has shifted in recent years, and more AAA studios are abandoning their proprietary engines. The reality is that unless a custom engine is necessary for specific game requirements, the dollars spent reinventing Unreal Engine are better spent elsewhere. The largest publishers, such as EA, may continue to work on their own engines, but less-resourced studios will continue to migrate.

Figure 4: A few examples of upcoming game releases where the developer has publicly disclosed shifting engines from in-house to Unreal.

Unreal Engine and Unity have dominated this market for many years, and for good reason. Unity has historically offered the best suite of monetization tools for mobile. Unreal offers amazing tools for out-of-the-box photorealism, at the cost of being heavier-weight and harder to navigate for certain teams. Godot, the popular open-source engine, has also gained share among studios that care about transparency and avoiding lock-in. This was especially true after Unity’s much-maligned pricing changes, which were announced in 2023 and ultimately reversed after industry pushback.3 4

Near-Term Outlook

All that being said, all three engines have been around for a long time: Godot was open-sourced in 2014, Unity launched in 2005, and Unreal Engine debuted in 1998. There has not been meaningful new competition in game engine technology for a long time. That is likely to change in a post-AI world. Several companies have been founded recently to re-imagine the game engine as an AI-native experience. These include:

Short-term:

“Vibecoding-first” platforms such as Jabali, Rosebud and Kitbash in which the code itself is mostly generative, with either imported assets, or GenAI assets, or both.

Copilots for Unity, Unreal, or Godot. There are companies such as Bezi and Unakin that are building assistants to offload much of the manual work of programming a game, designed to work within the engine itself. But the engines are building their own copilots, too.

Long-term:

Completely generative worlds, such as those glimpsed in demos from Genie 3 (Google), World Labs, and Decart. The Decart Minecraft demo is particularly neat. It generates pixels on the fly that represent “Minecraft” … but there is no hardcoded logic and there are no static rendered assets on the screen.5 To the player interacting with the “Minecraft” world, however, there is no difference.

Several research teams are experimenting with generating different genres of games.

Expect copilots and vibe coding to take over most of the programming tasks of an engine in the immediate future. In the long term, generative worlds may take share from the existing engines. That depends on whether they can (1) offer fine-grained control, not just prompt retries, and (2) drastically reduce cost.

A Note on the Cost Curve of GenAI: Why Unity and Unreal Engine are Here to Stay

With the recent release of Google DeepMind’s Genie 3 demo, there has been much discussion about whether the era of the traditional game engine is almost at an end. Today’s game developers painstakingly create 3D assets (more on that later), build textures to render, and program logic in a physics-based game engine. Genie 3’s world context memory and real-time simulation of object interactions allow it to create something similar to a game without those intermediate steps. Groundbreaking though it may be, Genie 3 is unlikely to fit the cost curve necessary to replace traditional tools for several years, if ever.

As an example, take a typical 20-hour AAA single-player campaign running at 30 frames per second (“fps”), with about 20% of time spent paused or in menus. That works out to roughly 1.7 million novel frames (20 hours × 3,600 seconds × 30 fps × 80% of time in active play ≈ 1.73 million). Genie 3 pricing is not public, so we can approximate based on Veo 3 (Google’s landmark text-to-video model). Veo 3’s retail API price is $0.50 to $0.75 per second of video at 24 fps, or 2.08¢ to 3.13¢ per frame. At those rates, generating 1.7 million frames costs $36,000 to $54,000 per player per 20-hour playthrough.
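The frame and cost arithmetic above can be reproduced in a few lines. This sketch uses the paragraph's own assumptions: 20 hours, 30 fps, and 80% active play. It also uses Veo 3's $0.50 to $0.75 per second of 24 fps video as a stand-in for Genie 3 pricing, which is not public. The function names are illustrative.

```python
SECONDS_PER_HOUR = 3600


def novel_frames(hours: float, fps: int, active_share: float) -> float:
    """Frames that must be generated for the non-paused part of a playthrough."""
    return hours * SECONDS_PER_HOUR * fps * active_share


def generation_cost(frames: float, price_per_second: float, video_fps: int = 24) -> float:
    """Cost to generate `frames`, given a per-second price for video at `video_fps`.

    The implied price per frame is price_per_second / video_fps
    (2.08 to 3.13 cents at 24 fps for the prices above).
    """
    return frames * price_per_second / video_fps


if __name__ == "__main__":
    frames = novel_frames(hours=20, fps=30, active_share=0.8)
    low = generation_cost(frames, 0.50)
    high = generation_cost(frames, 0.75)
    print(f"{frames:,.0f} frames -> ${low:,.0f} to ${high:,.0f} per playthrough")
```

This prints 1,728,000 frames and $36,000 to $54,000 per playthrough, matching the figures in the text.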

If Genie 3 cost the same to run as Veo 3 (Genie 3 is almost certainly more expensive than Veo 3), that is your per-player operating expense. At a $70 retail game price, you lose tens of thousands of dollars on every copy sold.

Now fast-forward: Assume infrastructure costs fall 30% per year. By 2035, that 1.7 million-frame session would still cost $1,000 to $1,500 per player. Break-even with today’s $70 price point is approximately 2043 under these optimistic assumptions. And this ignores multiplayer cost considerations, higher frame rates (double the cost at 60 fps?), and again the fact that Genie 3 ≠ Veo 3.
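The same projection can be sketched by compounding the assumed 30% annual decline from the $36,000 to $54,000 starting range. The sketch takes 2025 as the base year, which is an assumption. It steps forward one year at a time until the per-player cost falls to the $70 retail price.

```python
def cost_after(years: int, cost_today: float, annual_decline: float = 0.30) -> float:
    """Per-player cost after `years` of compounding decline."""
    return cost_today * (1 - annual_decline) ** years


def break_even_year(cost_today: float, price: float = 70.0,
                    annual_decline: float = 0.30, base_year: int = 2025) -> int:
    """First calendar year in which the projected cost is at or below `price`."""
    if not 0 < annual_decline < 1:
        raise ValueError("annual_decline must be between 0 and 1")
    years = 0
    while cost_after(years, cost_today, annual_decline) > price:
        years += 1
    return base_year + years


if __name__ == "__main__":
    for cost_today in (36_000, 54_000):
        print(
            f"start ${cost_today:,}: "
            f"2035 cost ~${cost_after(10, cost_today):,.0f}, "
            f"break-even in {break_even_year(cost_today)}"
        )
```

Under these assumptions, the 2035 cost comes out at roughly $1,017 to $1,525. Break-even lands in 2043 for the low end of the range and 2044 for the high end, in line with “approximately 2043.”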

There may be some step-function development in either software or hardware that pulls this timeline forward, but more likely is that the game developers of the near future will use a combination of AI and game engines to shorten parts of their development cycle or create new experiences. The future is a combination of tools, not a wholesale replacement.

Art & Animation (Percent of Budget: 10-30%)

“Art” encompasses everything from 3D or 2D character modeling, to model rigging, animation (motion capture and/or manual hand-keying), environment art, rigid object modeling (such as weapons, environments), the UI (User Interface – buttons, fonts, etc.), VFX (Visual Effects – explosions, flames, weather), lighting and more. Some of this is handled by the game engine; more still requires painstaking handiwork.

This is an area where much innovation is already happening. It was also one of the first applications of GenAI, with DALL-E, one of the first text-to-image models built on transformers. Stable Diffusion, Runway, Luma, and many others soon followed. Many professional game developers have already incorporated GenAI into their art pipelines. In a 2024 GDC survey, 16% of responding game developers said they were using similar tools.6 Expect this number to go way up. In conversations with studios in 2023-2024, I was told that AI was being used for concept art but was not good enough for in-game assets, let alone for going straight to production. That is changing rapidly. Activision recently confirmed its use of AI for in-game assets,7 and others are sure to follow if they are not already doing so.

Generative Video

One of the most significant challenges in generative video is achieving consistency across frames. Consistency refers to the model’s ability to render characters, objects, and environments identically throughout a video sequence or across multiple outputs. Most generative models, particularly diffusion and autoregressive models, are inherently probabilistic. They generate frames by sampling from a learned distribution conditioned on the initial prompt and, in autoregressive setups, on the preceding frames. These models often succeed at preserving broad motion continuity (e.g., a character walking across the screen), but they struggle to maintain fine-grained visual coherence. Small changes to a prompt, or attempts to iterate on a generation, can unintentionally alter critical visual elements, revealing limitations in current control mechanisms.
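A deliberately toy sketch can show why conditioning on preceding frames makes fine detail drift. It is not a model of any real video system. A single scalar attribute (say, a hypothetical “jacket hue”) picks up small random noise in every frame. When each frame is sampled relative to the previous frame, the errors accumulate like a random walk. When each frame is re-anchored to a fixed reference, the errors stay bounded. The noise level and frame count are arbitrary assumptions.

```python
import random


def mean_drift(num_frames: int, noise: float, anchored: bool, seed: int = 0) -> float:
    """Average absolute deviation of a scalar attribute from its reference value.

    anchored=False: each frame = previous frame + noise (errors accumulate).
    anchored=True:  each frame = fixed reference + noise (errors stay bounded).
    """
    rng = random.Random(seed)  # same seed -> same noise sequence for both modes
    reference = 0.0
    value = reference
    total_deviation = 0.0
    for _ in range(num_frames):
        base = reference if anchored else value
        value = base + rng.gauss(0.0, noise)
        total_deviation += abs(value - reference)
    return total_deviation / num_frames


if __name__ == "__main__":
    print("frame-to-frame:", round(mean_drift(240, 1.0, anchored=False), 2))
    print("anchored:      ", round(mean_drift(240, 1.0, anchored=True), 2))
```

The frame-to-frame version typically ends up much further from the reference. This gives one intuition for why reference conditioning and explicit 3D scaffolding (discussed below) can help.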

Control is another major limitation in current generative video models. In traditional Hollywood or AAA game development pipelines, experienced VFX artists demand precise, frame-level control over every visual element. In contrast, today’s leading generative models often rely heavily on prompt engineering and iterative re-generation, offering limited guarantees about output fidelity. Even when using reference images to guide style or composition, the lack of temporal and visual consistency (as discussed above) makes outcomes unpredictable. Tools like inpainting provide some local editing capabilities, but these are often constrained by context-awareness and do not scale well to complex scene or character edits over time.

Near-Term Outlook

As long as video generation models are limited to sequential 2D frame prediction, without an internal representation of spatial context or scene geometry, most will continue to struggle with both consistency and control. Some of the more promising platforms may instead use clever techniques that restore some of the controls you would otherwise expect only from full 3D mesh-based content. For example, tools like Moonvalley enable limited viewpoint shifts. Genvid, a TPV portfolio company, lets creators produce long-form generative content with strong character consistency without needing to generate 3D meshes.

One promising avenue involves integrating a true 3D framework into the generation process. Current video models typically generate 2D imagery that suggests a 3D scene – e.g., a photorealistic person drinking coffee – but without an underlying volumetric or mesh-based representation. An alternative approach would begin with a fully defined 3D scene composed of structured models and then generate photorealistic imagery atop that scaffolding. This method would enable granular control over elements like character pose, camera movement, and environmental layout. That more closely aligns with how traditional 3D animation pipelines operate. Companies like Intangible, an a16z Speedrun cohort member, are exploring this hybrid paradigm as a way to merge the flexibility of generative models with the precision of 3D scene composition.

Complementary to companies like Intangible, which focus on 3D scene control, are emerging tools focused on generative animation – a long-overdue evolution in how animated behavior is created for 3D characters.

Today, animating a 3D character remains a highly manual and time-intensive process. Tools like Mixamo have made it easier for artists to apply standard animations (e.g., walking, crouching, jumping), but customization remains a challenge. Achieving realistic, scene-specific motion typically requires motion capture sessions or days of manual keyframing, which means adjusting bones and constraints frame by frame using tools like Blender. And this comes after the prerequisite step of rigging: the complex process of aligning a skeletal structure to the mesh to make it animatable.
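Between the poses an animator sets by hand, the animation system fills in the in-between frames. The minimal sketch below shows that idea for one rotation channel of one bone. It assumes plain linear interpolation on a single angle in degrees; production tools typically use editable curves and quaternion rotations instead. The “elbow” keys are hypothetical.

```python
from bisect import bisect_right


def sample_channel(keys: list[tuple[int, float]], frame: float) -> float:
    """Linearly interpolate a keyframed value at `frame`.

    `keys` is a list of (frame, value) pairs sorted by frame. Frames before
    the first key or after the last key hold the nearest key's value.
    """
    if frame <= keys[0][0]:
        return keys[0][1]
    if frame >= keys[-1][0]:
        return keys[-1][1]
    i = bisect_right([k for k, _ in keys], frame)  # index of first key after `frame`
    (f0, v0), (f1, v1) = keys[i - 1], keys[i]
    t = (frame - f0) / (f1 - f0)
    return v0 + t * (v1 - v0)


if __name__ == "__main__":
    # Hypothetical elbow bend: straight at frame 0, 90 degrees at 10, 30 degrees at 20.
    elbow_keys = [(0, 0.0), (10, 90.0), (20, 30.0)]
    for frame in range(0, 21, 5):
        print(frame, sample_channel(elbow_keys, frame))
```

Now multiply this by every channel of every bone across every frame, and add the rigging step that comes first. The scale of the manual work becomes clear, and that is the work generative animation aims to automate.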

Newer platforms such as Uthana are aiming to change this tedious process by automating significant portions of the animation pipeline. Rather than relying solely on fixed animation templates, generative animation systems use AI to produce fluid, responsive character motion based on scene context, environment interactions, and narrative cues. The goal is to enable characters to move more naturally within a scene by adapting to surfaces, props, and player interactions in real time without requiring animators to touch every frame by hand.

Text-to-3D

To integrate text-to-3D generation into professional pipelines, whether in game development or Hollywood VFX, models must significantly improve mesh quality, specifically topology. While current tools like Meshy and Common Sense Machines can generate visually compelling 3D models from text or reference images in a fraction of the time it would take to model manually, the resulting geometry is not yet production-ready.

Topology refers to the polygonal structure – triangles or quads – beneath the rendered surface. Though a model may appear photorealistic in a still frame, attempting to rig and animate it often reveals major structural flaws. Poor joint deformation, inaccurate skin weights, or collapsing geometry (e.g., an arm movement causing the head to distort) are common symptoms of bad topology.

Figure 5: Left to right: model generated in Meshy with texture; the underlying triangle mesh; the model imported into Blender and converted to a quad mesh with no retopology. Note how messy this zero-shot quad mesh looks. It would not work as a production asset without manually fixing the mesh (hours of work).

Near-Term Outlook

Most current models treat 3D generation as a shape-then-mesh process: they learn to generate volumetric forms or implicit surfaces, and then attempt to convert those into polygonal meshes. This surface-first architecture explains why initial renders often appear clean and detailed, while the underlying mesh is fragmented, non-manifold, or topologically incorrect.

Producing a clean, animation-ready mesh requires solving a highly constrained problem. Unlike surface rendering, topology is a closed-grid system: every vertex and edge must connect logically to produce a watertight, deformable structure. Small errors, such as a misaligned vertex or a flipped normal, can cascade into rendering artifacts or broken animations. Compounding this issue is the lack of large, labeled datasets for training mesh generation models, particularly across varied topology styles (organic versus hard-surface, low-poly versus high-res).
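The minimal sketch below checks two of those rules. It assumes a mesh given as a list of faces, each a tuple of vertex indices in winding order (triangles or quads work equally well). The first check is that every edge is shared by exactly two faces, meaning no holes and no non-manifold edges. The second is that neighboring faces traverse their shared edge in opposite directions, meaning consistent winding and no flipped normals. Real validation tools check more, such as non-manifold vertices and degenerate faces. The function name is illustrative.

```python
from collections import Counter


def check_edges(faces: list[tuple[int, ...]]) -> tuple[bool, bool]:
    """Return (watertight, consistent_winding) for a polygon mesh.

    watertight: every undirected edge is used by exactly two faces
    (a count of 1 is a hole; 3 or more is a non-manifold edge).
    consistent_winding: no directed edge is used twice, so adjacent faces
    walk their shared edge in opposite directions.
    """
    directed = Counter()
    undirected = Counter()
    for face in faces:
        for i in range(len(face)):
            a, b = face[i], face[(i + 1) % len(face)]
            directed[(a, b)] += 1
            undirected[frozenset((a, b))] += 1
    watertight = all(count == 2 for count in undirected.values())
    consistent_winding = all(count == 1 for count in directed.values())
    return watertight, consistent_winding


if __name__ == "__main__":
    # A tetrahedron with consistently ordered faces.
    tetra = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
    print(check_edges(tetra))                    # (True, True)
    print(check_edges(tetra[:3] + [(1, 3, 2)]))  # (True, False): one face flipped
    print(check_edges(tetra[:3]))                # (False, True): one face missing
```

A single flipped face leaves the mesh closed but breaks winding consistency on all three of its edges. This is the kind of small local error that can cascade into shading or deformation artifacts.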

Despite these limitations, several strategies are emerging:

Manual Cleanup + Human-in-the-loop: Companies like Kaedim offer AI-assisted 3D asset creation augmented by human artists. While the initial model is generated via AI, topology is manually refined before delivery. This hybrid model aligns well with existing outsourcing workflows common in large studios.

AI-enhanced Editing Tools: Platforms like Spline, a TPV portfolio company, are blending Canva-style UX with native AI tooling. By combining fast generation with editable geometry, artists can iterate more quickly.

A more transformative solution lies in automating retopology, the manual process artists use to clean and reconstruct a mesh. Retopology is time-intensive and often regarded as one of the most tedious tasks in 3D asset creation. If AI can automate this step reliably, it would dramatically reduce the time from concept to usable asset.

Retopology is uniquely difficult for AI because it involves structured, rule-based geometry generation, not just plausible shapes. Errors propagate easily, and solutions must be highly context-sensitive. However, companies like Tractive are actively developing AI-powered retopology tools aimed at solving this problem.

If successful, these tools could bridge the gap between fast generative 3D and production-ready assets, which would unlock immediate value from upstream models like Meshy or Common Sense Machines.

Audio (Percent of Budget: ~10%)

Audio in games spans music, sound effects, and spoken dialogue – and in recent years, AI-generated voices have seen rapid improvement. As recently as 2022–2023, most leading AI voice models still exhibited telltale signs of synthetic speech, such as unnatural inflection or uncanny timing. Today, state-of-the-art models can sometimes fool even discerning listeners, and by 2026 or 2027, human-indistinguishable voice synthesis is likely to be commonplace.

While the technical milestones are impressive, the more pressing challenges are legal and ethical. In 2025, SAG-AFTRA (the largest union representing performers and media professionals) filed an unfair labor practice charge over Epic Games’ use of an AI clone of Darth Vader’s voice in Fortnite, even though Epic had permission from James Earl Jones’ estate. SAG-AFTRA’s argument was not about copyright or likeness rights, but about labor jurisdiction. Darth Vader is considered a “union-covered” role, and under union rules, any AI-generated performance must still go through union channels.

This case underscores a growing tension. Voice actors, particularly those working in games, advertising, and corporate media, face a real threat to their livelihoods. As one voice actor recently stated in an industry forum: “Lost my biggest voiceover client (~$35,000 a year in various scripts across their various business units) to AI at the beginning of this year.”8 While anecdotal, stories like these are increasingly common across the industry.

The path forward will likely involve a hybrid of AI and human performance. Even though the technology is ready today, there are still speed bumps to navigate.

Near-Term Outlook

There are already several examples of studios using voice cloning to recreate the voices of people who have died. Fortnite’s Darth Vader AI clone was already mentioned. Much earlier, in 2022, Resemble AI cloned Andy Warhol’s voice for the Netflix documentary The Andy Warhol Diaries. And in 2023, Cyberpunk 2077: Phantom Liberty used Respeecher’s technology to recreate the voice of a Polish actor who had died between the release of the original game and the expansion. In all of these examples, the producer obtained permission from the estates and families of the deceased.

One of the most impactful near-term applications of AI voice synthesis is localization and dubbing. AAA games often include thousands of lines of recorded dialogue, which take substantial time and budget to produce. Localizing this content for international markets multiplies that cost. It is an O(n) scaling problem: each additional language adds a roughly proportional increase in expense and logistical complexity. With the growth of global digital distribution, studios are now shipping games to more regions than ever before, which makes efficient localization a critical bottleneck.

AI-generated voices offer a promising alternative. By combining text translation with high-quality voice cloning or synthesis, studios can scale dialogue recording to multiple languages more affordably while preserving consistent tone, character identity, and delivery across markets. This could significantly reduce costs, speed up localization pipelines, and improve accessibility for global players.
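A simple linear model can sketch the O(n) shape of localization cost and what AI changes about it. Every figure here is a hypothetical placeholder in arbitrary cost units, not survey data. Human recording adds a full per-line cost for each new language. The AI pipeline is modeled, as an assumption, as a one-time setup cost plus a smaller per-line cost per language.

```python
def localization_cost(languages: int, lines: int, cost_per_line: float,
                      setup_cost: float = 0.0) -> float:
    """Total dialogue cost: one-time setup plus per-line cost for every language."""
    return setup_cost + languages * lines * cost_per_line


if __name__ == "__main__":
    LINES = 10_000  # hypothetical amount of recorded dialogue
    for n in (1, 5, 10, 20):
        human = localization_cost(n, LINES, cost_per_line=10)                 # placeholder rate
        ai = localization_cost(n, LINES, cost_per_line=2, setup_cost=50_000)  # placeholder rates
        print(f"{n:>2} languages: human {human:>9,.0f}  AI-assisted {ai:>9,.0f}")
```

Both curves remain O(n) in the number of languages. What the AI-assisted pipeline changes, under these assumptions, is the slope.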

Looking further ahead, AI voices play a foundational role in enabling generative dialogue systems. Technologies like Inworld and Convai are already being used to generate character dialogue dynamically, adapting to player behavior, tone, and context in real time. Paired with authentically human-sounding speech synthesis, this opens the door to truly interactive NPCs capable of unscripted conversations, branching narratives, or even emergent quests. It also creates new types of player experiences and new ways of connecting with NPCs.

Testing & Quality Assurance (“QA”) (Percent of Budget: 10-20%)

Quality Assurance in game development is traditionally handled by a combination of in-house QA teams and outsourced partners. For large games and AAA studios with bigger budgets and more content to test, it is very common to keep QA leadership and a small team in-house and to outsource work during crunch periods near the end of the development cycle.

Ideally, testing would follow a continuous integration/continuous delivery (CI/CD) model, as seen in modern software engineering. This would involve incremental testing of small, testable content slices throughout development. However, in practice, many studios still rely on infrequent, large-scale QA sweeps. This leads to bugs being discovered late in the cycle, when fixes are more costly and disruptive to implement. That then leads to either release delays to fix the bugs, or else shipping a buggy game, getting poor early reviews, and pushing out a post-launch patch to fix the major issues and hope the game recovers (see: many poorly optimized PC ports).

When bugs make it into the final release, the consequences can be severe. A high-profile example is Cyberpunk 2077, which launched in December 2020 with widespread performance issues and game-breaking bugs. Developer CD Projekt Red allocated over $50 million for refunds and suffered a 40% drop in stock value post-launch. The backlash included a class-action lawsuit from investors and a substantial long-term hit to the studio’s reputation. The game’s reputation has since recovered after extensive post-launch updates.

While some studios survive these missteps (e.g., the Elder Scrolls franchise, whose bugs are often perceived as part of the charm), others experience significant commercial and reputational damage. In a media environment driven by early reviews and first-week impressions, launching with unresolved technical issues is increasingly risky.

So why aren’t studios better at finding and fixing bugs? It is because a human-led QA effort will always be flawed for three reasons:

QA teams have finite budgets, which means they might not find every game-breaking state in time.

Even a well-resourced QA team’s time is at the mercy of the rest of the development team (in other words, you can’t test what you can’t play yet).

Even when bugs are found and reproducible, it is not always possible to address all of them before the release window. Teams have to ruthlessly prioritize which to fix and which to leave, which inevitably means some bugs ship unfixed.
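At its simplest, the prioritization in the last point is a capacity problem. The minimal sketch below assumes a hypothetical severity scale and per-bug fix estimates. It uses a greedy rule to fill a fixed number of engineer-days before the release window: most severe first, and cheapest first among ties. Real triage also weighs how often a bug occurs, how many players it affects, and the risk a fix introduces. A greedy rule is also not guaranteed to be optimal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bug:
    name: str
    severity: int     # hypothetical scale: 1 = cosmetic ... 5 = game-breaking
    fix_days: float   # estimated engineer-days to fix


def triage(bugs: list[Bug], capacity_days: float) -> tuple[list[Bug], list[Bug]]:
    """Greedy triage: most severe first, cheapest first among equal severity.

    Returns (bugs to fix before release, bugs deferred past release).
    """
    to_fix, deferred = [], []
    remaining = capacity_days
    for bug in sorted(bugs, key=lambda b: (-b.severity, b.fix_days)):
        if bug.fix_days <= remaining:
            to_fix.append(bug)
            remaining -= bug.fix_days
        else:
            deferred.append(bug)
    return to_fix, deferred


if __name__ == "__main__":
    backlog = [
        Bug("crash on load", 5, 3),
        Bug("save corruption", 5, 6),
        Bug("softlock", 4, 4),
        Bug("clipping", 2, 1),
        Bug("typo", 1, 0.5),
    ]
    to_fix, deferred = triage(backlog, capacity_days=10)
    print("fix:", [b.name for b in to_fix])
    print("defer:", [b.name for b in deferred])
```

With 10 days of capacity, the two severity-5 bugs consume 9 days. The severity-4 softlock is deferred, while a one-day clipping fix still fits. This is exactly the kind of uncomfortable trade-off described above.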

Near-Term Outlook

One of the most promising advancements in quality assurance is the use of autonomous agents that can play games without human intervention. These agents simulate a wide range of gameplay scenarios, helping to identify bugs earlier and prioritize the most critical issues. This enables a shift from batch testing late in the cycle to more continuous, automated validation throughout development.
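Underneath the agent approaches in this section is a simple idea: explore the game’s states and flag the ones from which the game can no longer be finished (a softlock). On a tiny, hand-written state graph, this can be done exhaustively with breadth-first search, as in the sketch below. The level names are hypothetical. Real games have far too many states for exhaustive search, which is why agents sample gameplay instead. This illustrates the concept only; it is not how any particular product works.

```python
from collections import deque


def reachable(graph: dict[str, list[str]], start: str) -> set[str]:
    """All states reachable from `start`, found by breadth-first search."""
    seen = {start}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        for nxt in graph.get(state, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def find_softlocks(graph: dict[str, list[str]], start: str, goal: str) -> list[str]:
    """States a player can reach from `start` but from which `goal` is unreachable."""
    return sorted(s for s in reachable(graph, start) if goal not in reachable(graph, s))


if __name__ == "__main__":
    # Hypothetical level graph: each state lists the states the player can move to.
    level = {
        "start": ["hub"],
        "hub": ["cave", "bridge"],
        "cave": ["hub"],
        "bridge": ["boss", "collapsed_ledge"],
        "collapsed_ledge": [],  # bug: no way back up
        "boss": ["credits"],
        "credits": [],
    }
    print(find_softlocks(level, "start", "credits"))  # ['collapsed_ledge']
```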

There are two major approaches in this space:

Regression-based agents are trained on specific gameplay conditions. For instance, an agent might be trained to win at StarCraft by optimizing its actions over a very large number of simulated playthroughs. These models excel at task-specific optimization, but they are limited by their need for extensive training data and their lack of generalization. They cannot easily adapt to unfamiliar games or changing contexts.

Reasoning-based agents are designed for broader adaptability. Instead of learning fixed strategies, they interpret the game environment semantically and make decisions based on scene-level understanding. This enables them to engage with unfamiliar games or content with little or no prior training.

A notable example of this approach is nunu.ai, which combines human-authored test plans with autonomous agents that can execute them dynamically, without bespoke training. This hybrid model aligns with existing developer workflows while unlocking new efficiencies. In the near term, such tools can capture value within the typical 10–20% of development budgets allocated to QA. More importantly, early bug detection improves launch-day quality, which directly affects reviews, sales performance, and long-term studio reputation.

In the longer term, many of the same technologies that are being pursued for QA agents may also find their way into broader NPC behavior. If an agent can play Call of Duty to look for bugs, why can’t it also play a real Call of Duty match against a human? A “bot” is the term used for non-player characters that are designed to be played against in the absence of human players. These are not historically AI-based but instead a combination of goal-oriented planning and decision trees that tell the bot how to navigate the environment and how to win. True autonomous planning is a newer concept and will lead to more engaging non-player experiences.
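For contrast with autonomous planning, a traditional bot’s decision logic can be as simple as a hand-authored decision tree. The minimal sketch below uses hypothetical inputs, thresholds, and action names. A shipped shooter bot would layer logic like this over navigation data and goal-oriented planning.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BotView:
    """What the bot can observe this tick (hypothetical fields)."""
    health: float           # 0.0 (dead) to 1.0 (full)
    enemy_visible: bool
    enemy_distance: float   # meters; only meaningful if enemy_visible
    has_ammo: bool


def choose_action(view: BotView) -> str:
    """Walk a fixed decision tree from the top and return the first matching action."""
    if view.health < 0.25:
        return "retreat_to_cover"
    if view.enemy_visible:
        if not view.has_ammo:
            return "find_ammo"
        if view.enemy_distance > 30.0:
            return "advance"
        return "attack"
    return "patrol"


if __name__ == "__main__":
    print(choose_action(BotView(0.9, True, 12.0, True)))   # attack
    print(choose_action(BotView(0.1, True, 12.0, True)))   # retreat_to_cover
    print(choose_action(BotView(0.9, False, 0.0, True)))   # patrol
```

Every branch is authored by hand, which is part of why such bots can feel predictable. An autonomous agent would instead choose actions from its own understanding of the scene and its goal.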

A Note on Hardware

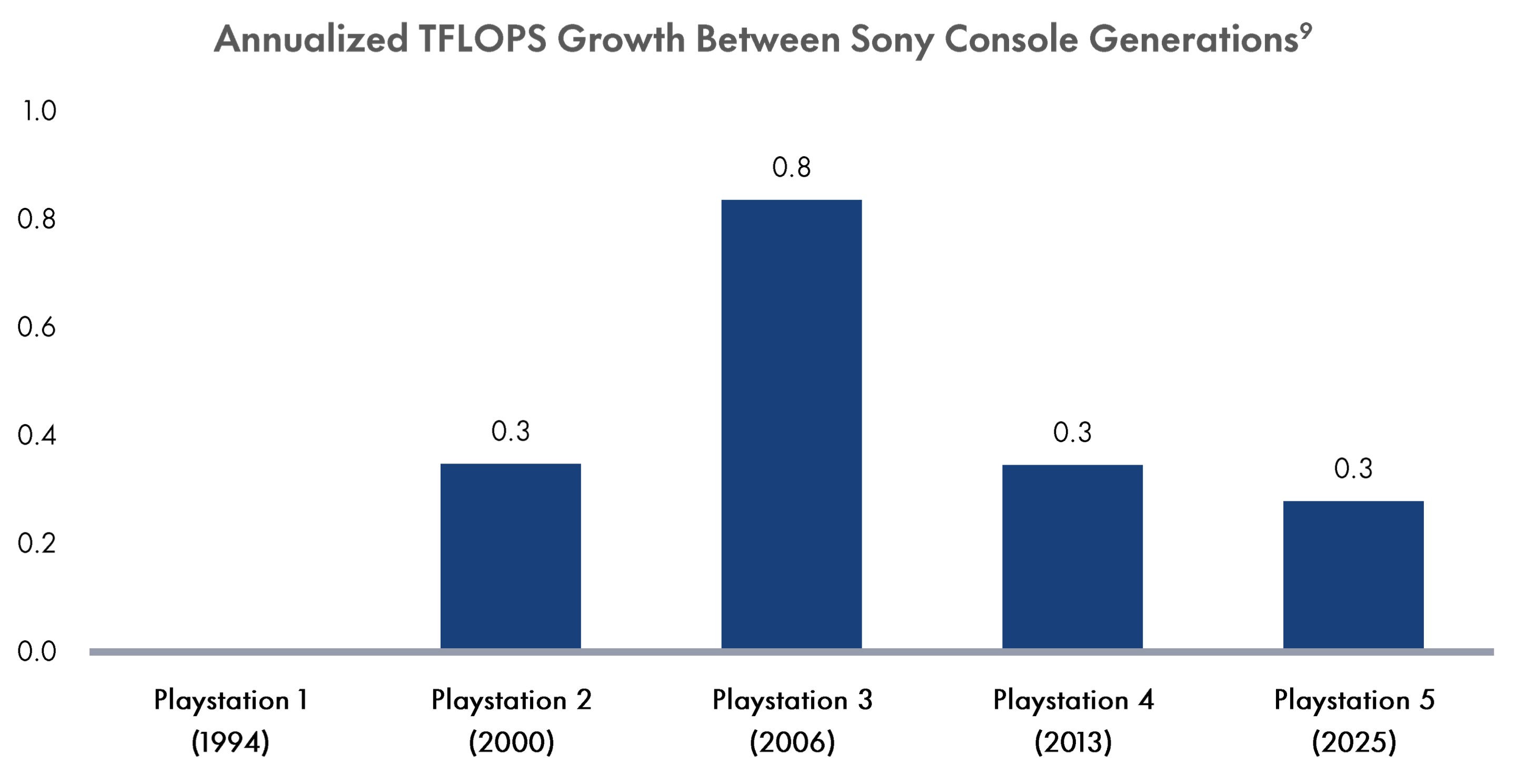

Console and PC hardware have consistently evolved to enable better graphics and more resource-demanding games. The CPU generally handles tasks like physics, game logic, and behavior, while the GPU handles graphics: rendering, shaders, lighting, ray tracing, and so on. The standard metric for GPU capability is “TFLOPS,” or tera floating-point operations per second. It measures how many trillion floating-point calculations the GPU can perform per second, which makes it a proxy for the GPU’s horsepower. For several decades (pre-2010), Sony, Microsoft, and to a lesser extent Nintendo were playing a game of “who can have more TFLOPS in their console” to support more complex 3D graphics. However, the hardware needed for this performance requires ever more energy and heat dissipation. For context, the PS5 draws an average of 210 watts. That is about the same as two mini fridges and about half a modern high-end PC GPU, and the PS5 is already the largest video game console in history. You simply can’t increase the performance of a modern console without increasing its size, heat output, noise, or all three. This trade-off is why console TFLOPS improvement has been slowing over time.
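Peak TFLOPS figures are usually derived rather than measured. The sketch below assumes the common formula for AMD GCN/RDNA-style GPUs: compute units × 64 shader ALUs per unit × 2 floating-point operations per clock (one fused multiply-add) × clock speed. It uses the publicly stated GPU configurations of the PS4 (18 compute units at 800 MHz) and the PS5 (36 compute units at up to 2.23 GHz).

```python
def peak_tflops(compute_units: int, clock_mhz: int,
                alus_per_cu: int = 64, flops_per_alu_per_clock: int = 2) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes 64 ALUs per compute unit and 2 FLOPs per ALU per clock (one
    fused multiply-add), as on AMD GCN/RDNA GPUs. Real-world throughput is lower.
    """
    flops = compute_units * alus_per_cu * flops_per_alu_per_clock * clock_mhz * 1_000_000
    return flops / 1e12


if __name__ == "__main__":
    print(f"PS4: {peak_tflops(18, 800):.2f} TFLOPS")   # 1.84
    print(f"PS5: {peak_tflops(36, 2230):.2f} TFLOPS")  # 10.28
```

The formula also shows the only two levers for raw TFLOPS: more compute units or higher clocks. Both raise power draw and heat.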

Figure 6: TFLOPS growth slowing each generation

Figure 7: Shown another way: TFLOPS growth between successive Sony consoles peaked between PlayStation 2 and PlayStation 3 and has declined with each console since

The future of console performance isn’t more TFLOPS. It is specialized cores that handle very specific AI-powered operations, which let you do more with fewer TFLOPS. The Switch 2 (in handheld mode) is technically less “powerful” than the 12-year-old PS4, but its games look just as good, if not better. Why? The Switch 2 uses hardware that supports ray tracing and DLSS (a type of ‘neural rendering’ that uses AI to upscale images), and the PS4 did not. This means the Switch 2 can render at lower resolutions and use AI to upscale, so you get top-tier picture quality without trading off battery life or frame rate.
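The saving from upscaling is easy to estimate if you assume, as a simplification, that per-pixel shading work dominates frame cost. In practice the upscaler itself is not free, though it runs on dedicated AI hardware. The sketch below compares native rendering with rendering at a lower internal resolution and upscaling to the same output. The resolutions are standard display modes, not a claim about any specific Switch 2 game.

```python
def shaded_pixel_ratio(output: tuple[int, int], internal: tuple[int, int]) -> float:
    """How many times more pixels native rendering shades than the internal render."""
    (out_w, out_h), (in_w, in_h) = output, internal
    return (out_w * out_h) / (in_w * in_h)


if __name__ == "__main__":
    UHD_4K = (3840, 2160)
    print(shaded_pixel_ratio(UHD_4K, (1920, 1080)))  # 4.0 with a 1080p internal render
    print(shaded_pixel_ratio(UHD_4K, (2560, 1440)))  # 2.25 with a 1440p internal render
```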

To support continued AI deployment at runtime, expect hardware to keep pushing toward AI-specific efficiencies rather than more raw compute. Near-term innovations in neural rendering beyond DLSS are on a lot of people’s minds in the industry right now. This is especially true of synthesizing frames (to increase frame rate) and of AI-powered lighting synthesis that may completely replace computationally expensive ray tracing. Other AI applications, such as NPC bots or language models for real-time dialogue, may also be squeezed into console hardware over time as models get smaller and hardware becomes better at running them efficiently.

Tying it All Together

In the near term, AI will produce tremendous cost savings in the games and media industry and shorten development cycles to get more great content out the door faster. In the longer term, AI has the potential to be transformative.

Near-term cost savings = take time and expense out of the budget by automating tasks like QA and testing (up to 10-20% budget savings) or art (up to 10-30% budget savings). These savings are quantifiable and easy for a production director to understand.

Transformative = much longer-term, much harder to quantify, but much more far-reaching. As more of these technologies mature, they will start to work together. Consider several of the technologies touched on earlier in this report, along with representative companies:

NPC behavior and planning (Artificial Agency)

Generative dialogue (Inworld)

Generative 3D characters (via asset generation like Spline, or video generation like Genvid)

Generative animation (Uthana)

Generative audio (ElevenLabs)

Hardware that enables all of this to work on your device/console/phone

When all of these elements work together in production, with narratives and characters whose guardrails you define but do not hard-code, you end up with truly emergent experiences and worlds that actually feel responsive and alive. We don’t have that today, but we will. And that market may be much bigger than today’s games.

References

1 GameDev Reports, "Video Game Insights: The Big Game Engine Report of 2025," February 10, 2025.

2 Kotaku, "The Story Behind Mass Effect: Andromeda's Troubled Five-Year Development," June 17, 2017.

3 CG Channel, "Unity Scraps Controversial Runtime Fee but Raises Prices," September 13, 2024.

6 PC Gamer, "31% of Game Developers Already Use Generative AI," January 18, 2024.

8 u/VoiceActing, "Has Your Work Been Affected by AI?" Reddit, June 25, 2024.

9 GameSpot, "Console GPU Power Compared: Ranking Systems by FLOPS," November 3, 2017.

Disclaimers

Third Point LLC ("Third Point" or "Investment Manager") is an SEC-registered investment adviser headquartered in New York. Third Point is primarily engaged in providing discretionary investment advisory services to its proprietary private investment funds, including a hedge fund of funds (each a “Fund” and collectively, the “Funds”). Third Point also manages several separate accounts and other proprietary funds that pursue different strategies. Those funds are not the subject of this presentation.

Unless indicated otherwise, the discussion of trends in the above slides reflects the current market views, opinions and expectations of Third Point based on its historic experience. Historic market trends are not reliable indicators of actual future market behavior or future performance of any particular investment or Fund and are not to be relied upon as such. There can be no assurance that the positive trends and outlook discussed in this topic will continue in the future. Certain information contained herein constitutes "forward-looking statements," which can be identified by the use of forward-looking terminology such as "may," "will," "should," "expect," "anticipate," "project," "estimate," "intend," "continue" or "believe" or "potential" or the negatives thereof or other variations thereon or other comparable terminology. Due to various risks and uncertainties, actual events or results or the actual performance of the Fund may differ materially from those reflected or contemplated in such forward-looking statements. No representation or warranty is made as to future performance or such forward-looking statements. Past performance is not necessarily indicative of future results, and there can be no assurance that the Funds will achieve results comparable to those of prior results, or that the Funds will be able to implement their respective investment strategy or achieve investment objectives or otherwise be profitable. All information provided herein is for informational purposes only and should not be deemed as a recommendation or solicitation to buy or sell securities including any interest in any fund managed or advised by Third Point. All investments involve risk including the loss of principal. Any such offer or solicitation may only be made by means of delivery of an approved confidential offering memorandum.

Nothing in this presentation is intended to constitute the rendering of "investment advice," within the meaning of Section 3(21)(A)(ii) of ERISA, to any investor in the Funds or to any person acting on its behalf, including investment advice in the form of a recommendation as to the advisability of acquiring, holding, disposing of, or exchanging securities or other investment property, or to otherwise create an ERISA fiduciary relationship between any potential investor, or any person acting on its behalf, and the Funds, the General Partner, or the Investment Manager, or any of their respective affiliates. Specific companies or securities shown in this presentation are for informational purposes only and meant to demonstrate Third Point's investment style and the types of industries and instruments in which Third Point invests and are not selected based on past performance.

The companies highlighted in this presentation are for illustrative purposes only. There can be no assurance that a Fund implementing the TPV strategy will make an investment in all or any of the deals or on the terms estimated herein. As a result, this presentation is not intended to be, and should not be read as, a full and complete description of each investment that the TPV strategy may make. The analyses and conclusions of Third Point contained in this presentation include certain statements, assumptions, estimates and projections that reflect various assumptions by Third Point concerning anticipated results that are inherently subject to significant economic, competitive, and other uncertainties and contingencies. There can be no guarantee, express or implied, as to the accuracy or completeness of such statements, assumptions, estimates or projections or with respect to any other materials herein. A broad range of risks could cause actual investments to materially differ from the TPV Deal Pipeline, including a failure to deploy capital sufficiently quickly to take advantage of investment opportunities, changes in the economic and business environment, tax rates, financing costs and the availability of financing, selling prices, regulatory changes and any other unforeseen expenses or issues. Third Point may buy, sell, cover or otherwise change the nature, form or amount of its investments, including any investments identified in this presentation, without further notice and in Third Point's sole discretion for any reason.

Except where otherwise indicated herein, the information provided herein is based on matters as they exist as of the date of preparation and not as of any future date, and will not be updated or otherwise revised to reflect information that subsequently becomes available, or circumstances existing or changes occurring after the date hereof. There is no implication that the information contained herein is correct as of any time subsequent to the date of preparation. Certain information contained herein (including financial information and information relating to investments in companies) has been obtained from published and non-published sources prepared by third parties. Such information has not been independently verified and neither the Fund implementing the TPV strategy nor Third Point assumes any responsibility for the accuracy or completeness of such information.

This presentation may contain trade names, trademarks or service marks of other companies. Third Point does not intend the use or display of other parties’ trade names, trademarks or service marks to imply a relationship with, approval by or sponsorship of these other parties.